// Week 12: Parallel Execution Plans

In this installment of the SQL Server Performance Tuning series I want to talk in more details about Parallel Execution Plans in SQL Server. Executing a query with a parallel execution plan means that multiple threads are used by SQL Server to perform the necessary operators from the execution plan. In the first step I will give you a general introduction to the most common operators used in a parallel execution plan, and afterwards we talk in more details about how SQL Server decides if a parallel plan make sense.

Parallel Operators

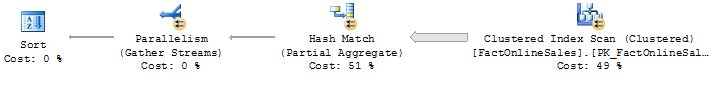

The most common misconception about parallel execution plans is that only a given set of threads (e.g. 8 threads on a 8-core machine) is used for the whole plan. That’s not really true, because SQL Server assign multiple worker threads to all the operators that are parallelism-aware. This means that a larger parallel plan can consume a huge amount of threads. SQL Server distinguishes between two kinds of operators in a parallel plan: parallelism-aware operators, and so-called Exchange Operators:

- A lot of the traditional operators are able to perform their work with multiple threads, they are parallelism-aware: Index Scan, Index Seek, Nested Loop, Hash Join, Sort, etc.

- Exchange Operators are used to distribute and merge rows between multiple threads in a parallel execution plan.

SQL Server implements the following 3 Exchange Operators:

- Distribute Streams: Used to transition from a single-threaded region to a multi-threaded region in a parallel plan

- Repartition Streams: Used to redistribute rows between threads (e.g. when the upfront operator is a parallel Hash Join)

- Gather Streams: Used to transition from a multi-threaded region to a single-threaded region in a parallel plan

When you look on a parallel execution plan you will always see a combination of both kinds of operators. Every execution plan must produce a single-threaded result, therefore you will always find at the end of a parallel execution plan a Gather Streams operator.

If the Query Optimizer produces a parallel plan also depends if you are using query constructs that prevents a parallel plan, like:

- T-SQL and SQLCLR UDFs

- Build-In functions like OBJECT_ID(), ERROR_NUMBER(), @@TRANCOUNT

There are also multiple query constructs which are forcing a serial zone in your parallel plan:

- System Table Scans

- Sequence Functions

- Backward Scans

- Recursive Queries

- TVFs

- TOP

The less serial zones you have in your parallel plan, the faster your query will be. Think about that the next time when you write your queries.

When to go parallel?

Every execution plan gets a so-called Cost Factor assigned by SQL Server. The cost factor is just a simple number that tells SQL Server, how expensive an execution plan is. The higher the number, the higher the associated costs for running that execution plan.

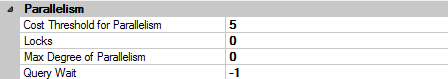

SQL Server has a configuration option called Cost-Threshold for Parallelism, which defines the cost factor at which the Query Optimizer also “thinks” about parallel plans. By default that configuration option is set to 5, means a query with a higher cost factor will be executed in parallel, as long as parallelism is possible.

When a parallel plan is compiled by the Query Optimizer, the option Max Degree of Parallelism (MAXDOP) defines how many threads are used for every parallel operator in the execution. As I have already said earlier, every operator within the parallel execution plan runs with multiple threads, not only the complete plan. Of course, threads can be shared and reused by upfront operators in the parallel execution plan. The following picture shows you the two configuration options on the SQL Server Instance level.

By default the MAXDOP option is set to 0. Therefore SQL Server will by default parallelize a query across all CPU cores that are assigned to SQL Server. This can lead to performance problems if you are dealing with a NUMA system (Non Uniform Memory Access). A good best practice is to limit the MAXDOP option to the number of cores that you have within one NUMA node (incl. cores that are coming from Hyperthreading). SQL Server will then also ensure that parallel plans stay within a NUMA node.

Summary

In this installment of the SQL Server Performance Tuning series I have talked about Parallel Execution Plans in SQL Server. You have learned about the various operators that are involved in parallel plans, and how you can configure parallelism in SQL Server. If you are interested in more details about parallel plans, I also highly suggest to read the following online articles about it:

- https://www.simple-talk.com/sql/learn-sql-server/understanding-and-using-parallelism-in-sql-server/

- http://sqlblog.com/blogs/adam_machanic/archive/2010/05/24/sql-university-parallelism-week-introduction.aspx

With this newsletter the 3nd month of the SQL Server Performance Tuning series is already over, and in the next month I will be talking about Statistics in SQL Server. Stay tuned!